Aside from my forays deep into the realm of the left brain (read: machine learning), I am also a classical pianist. I started playing about 6 years ago and have continued ever since. Those of you familiar with this realm will have noticed that the image used for the cover of this post shows the first lines of Chopin’s Nocturne No. 20 in C-sharp minor (Op. posth.), the one used in the movie ‘The Pianist’. This is one of my favorite short pieces, both to listen to and to play. You must be wondering why I am suddenly talking about music when I have posted mostly about computer science thus far. Well, there are more connections between the two than one would imagine at first, and their depth will hopefully be clearer by the end of the next few paragraphs.

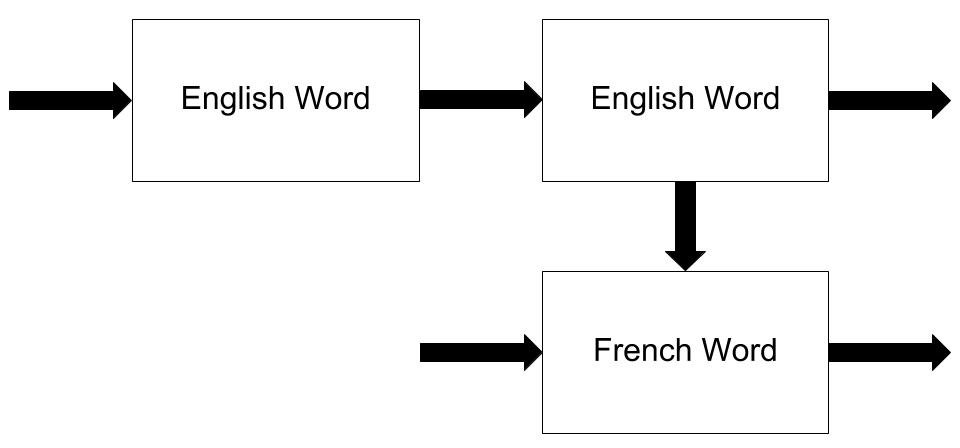

Leaving music aside for a moment, let’s talk about natural language processing (NLP), with the specific application of machine translation. The problem at hand is this: given a sentence written in English, the machine must translate it into French. I will break down this seemingly complex task. First, let’s talk about the mechanics of the problem. More formally, we have as input a sequence of words, and our output must also be a sequence of words (not necessarily of the same length). In this formalization, each word in the input sequence has some relation to the neighboring words in the input sequence and also has some relation to the output sequence. This can be represented as:

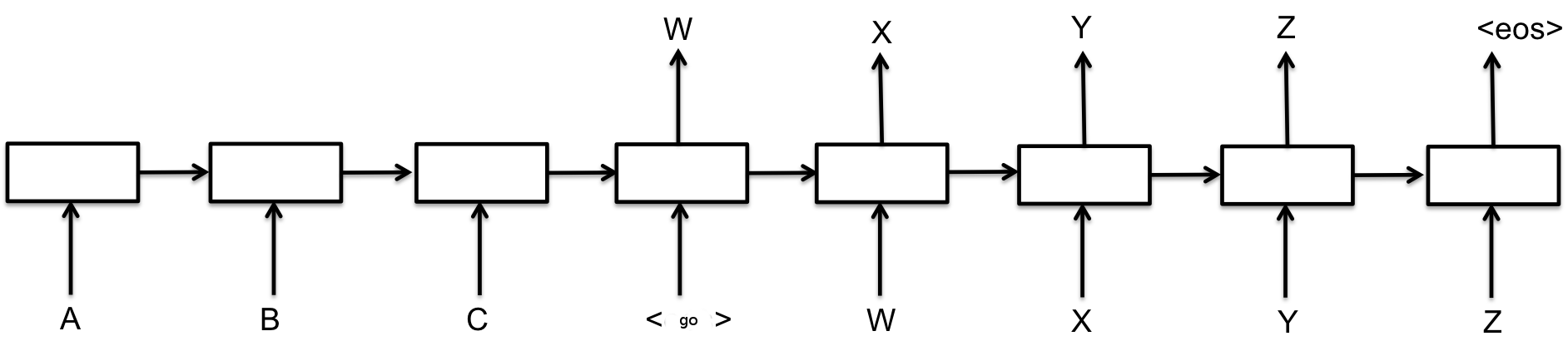

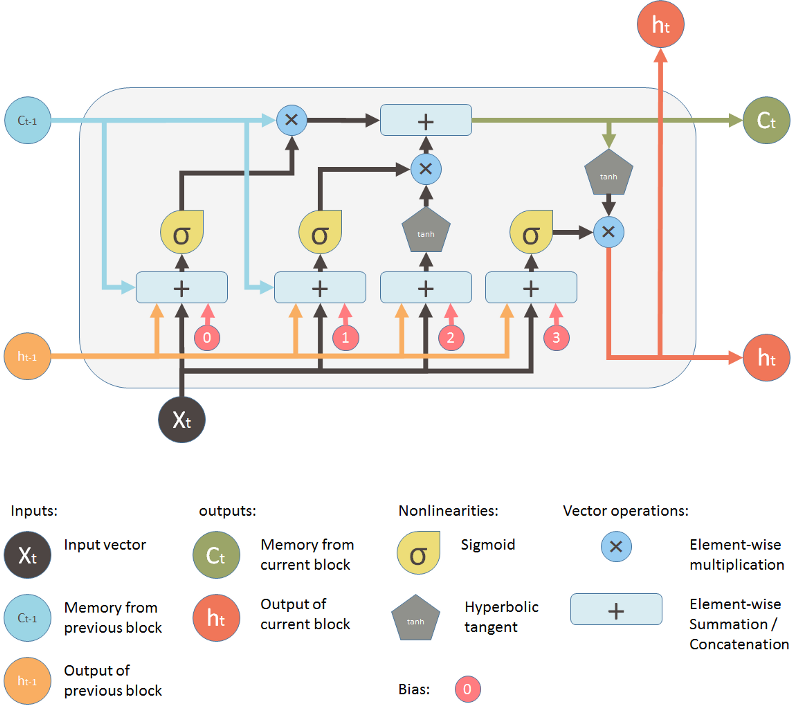

This problem is known as a sequence to sequence learning problem. To tackle it, one can say (just by intuition) that one would require a neural network with memory: a recurrent neural network (RNN). This is so because the network must be able to figure out the relation of each input word to its neighboring words in the sequence. Thus, a sequence to sequence problem is formulated as an encoder network (an RNN) cascaded with a decoder network (another RNN). The job of the encoder network is to interpret the information from the input sequence, essentially the meaning it is trying to convey. The decoder then conveys that meaning in the other sequence.
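The encoder-decoder arrangement described here can be sketched in plain Python. This is only an illustrative toy under stated assumptions: the class name `Seq2Seq`, the helper names, the vocabulary sizes, and the hidden size are all made up for the example. It uses a vanilla RNN cell rather than a GRU or LSTM, and the weights are random and untrained, so the output tokens are meaningless. The point is the data flow: the encoder folds the input sequence into one hidden vector, and the decoder unrolls that vector into an output sequence whose length need not match the input.

```python
import math
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def rand_matrix(rows, cols, rng):
    """Small random weights; a real model would learn these from data."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def one_hot(index, size):
    return [1.0 if j == index else 0.0 for j in range(size)]


def rnn_step(W_in, W_h, x, h):
    """Vanilla RNN update: h' = tanh(W_in x + W_h h)."""
    return [math.tanh(a + b) for a, b in zip(matvec(W_in, x), matvec(W_h, h))]


class Seq2Seq:
    """Hypothetical toy encoder-decoder; not a trained translation model."""

    def __init__(self, src_vocab, tgt_vocab, hidden=8, seed=0):
        rng = random.Random(seed)
        self.src_vocab, self.tgt_vocab, self.hidden = src_vocab, tgt_vocab, hidden
        self.enc_in = rand_matrix(hidden, src_vocab, rng)
        self.enc_h = rand_matrix(hidden, hidden, rng)
        self.dec_in = rand_matrix(hidden, tgt_vocab, rng)
        self.dec_h = rand_matrix(hidden, hidden, rng)
        self.out = rand_matrix(tgt_vocab, hidden, rng)

    def encode(self, src_ids):
        """Read the whole input sequence and return the final hidden state."""
        h = [0.0] * self.hidden
        for token in src_ids:
            h = rnn_step(self.enc_in, self.enc_h, one_hot(token, self.src_vocab), h)
        return h

    def decode(self, h, start_id, end_id, max_len=10):
        """Greedily emit output tokens, starting from the encoder's state."""
        output, prev = [], start_id
        for _ in range(max_len):
            h = rnn_step(self.dec_in, self.dec_h, one_hot(prev, self.tgt_vocab), h)
            scores = matvec(self.out, h)
            prev = max(range(self.tgt_vocab), key=scores.__getitem__)
            if prev == end_id:  # stop when the end-of-sequence token is chosen
                break
            output.append(prev)
        return output


model = Seq2Seq(src_vocab=5, tgt_vocab=6)
context = model.encode([1, 3, 2])          # e.g. ids of three English words
translation = model.decode(context, start_id=0, end_id=1)
print(len(context), translation)
```

The only link between the two networks is `context`, which is why the encoder has to squeeze the whole meaning of the input into that single vector.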

So, in the translation problem, the encoder network extracts the important information from the English sentence and the decoder reads that information to produce the French sentence. The neurons generally used are either gated recurrent units (GRUs) or long short-term memory units (LSTMs). An LSTM unit is a very interesting neuron which summarizes our process of ‘thinking’. This is explained brilliantly in this blog post by . The basic architecture of the neuron is:

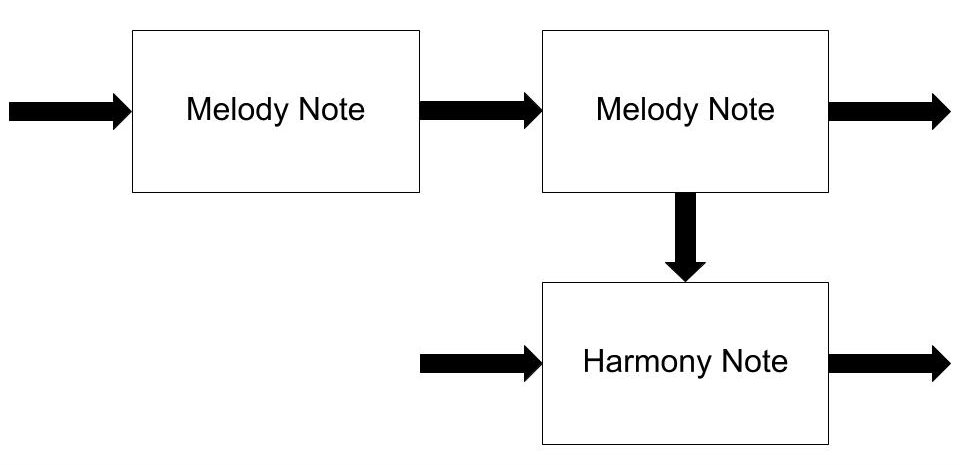
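For readers who prefer code to diagrams, here is a minimal sketch of one time step of an LSTM unit in plain Python. It follows the commonly used formulation (forget, input, and output gates plus a candidate cell value), which may differ in small details from the figure above. The function names, the parameter dictionary layout, and the sizes are assumptions made for this example, and the weights are random rather than trained.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, v):
    """Compute W v + b for a matrix W (list of rows) and vectors b, v."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(params, x, h_prev, c_prev):
    """One LSTM time step: returns the new hidden state h and cell state c."""
    v = h_prev + x  # list concatenation: [h_prev, x]
    f = [sigmoid(z) for z in affine(params["W_f"], params["b_f"], v)]    # forget gate
    i = [sigmoid(z) for z in affine(params["W_i"], params["b_i"], v)]    # input gate
    o = [sigmoid(z) for z in affine(params["W_o"], params["b_o"], v)]    # output gate
    g = [math.tanh(z) for z in affine(params["W_g"], params["b_g"], v)]  # candidate
    # Keep part of the old memory and write part of the new candidate.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # Expose a gated view of the memory as the hidden state.
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def init_params(input_size, hidden_size, seed=0):
    """Random weights and zero biases for the four gates (illustrative only)."""
    rng = random.Random(seed)
    params = {}
    for gate in "fiog":
        params["W_" + gate] = [
            [rng.uniform(-0.5, 0.5) for _ in range(hidden_size + input_size)]
            for _ in range(hidden_size)
        ]
        params["b_" + gate] = [0.0] * hidden_size
    return params


params = init_params(input_size=3, hidden_size=4)
h, c = [0.0] * 4, [0.0] * 4
for x in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]):
    h, c = lstm_step(params, x, h, c)  # the state carries over between steps
print(h)
```

The cell state `c` is the unit's memory: the forget gate decides how much of it survives each step, and the input gate decides how much new information is written into it.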

Now, let’s jump back to music again. It is often said that music is a language, and this was the inspiration for a few friends (including Shubh) and me to come up with a system which harmonizes music. For those of you who are less musically inclined: each song can be thought of as having a lead melody and a harmony (sometimes more than one), and these need not be the same. Restricting ourselves to piano music (western classical), the melody usually runs in the treble clef and the harmony in the bass clef. (Before reading further, do familiarize yourself with a bit of music theory.) Needless to say, the harmony line is determined by the melody. Not every note/chord ‘goes with’ every other. The fundamental task, hence, is to learn which notes/chords go well with which others. Not only that, the melody line itself depends on previous patterns (e.g. rhythm, the number of beats, scale etc.). So, modeling the melody and the harmony lines as sequences of notes, the problem can be represented as:

Doesn’t this look familiar? Of course, this is the sequence to sequence learning problem I described earlier.
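To make "sequences of notes" concrete, here is one possible way to turn a melody and a harmony line into integer sequences that a sequence to sequence model could consume. This is a sketch under assumptions, not a description of our actual method: the helper names are made up, the encoding (MIDI note numbers, with chords as tuples) is just one reasonable choice, and the four-note melody and two-chord harmony are invented for illustration rather than taken from any real piece. Rhythm and note durations are ignored here.

```python
# Semitone offsets of the natural notes within an octave.
SEMITONES = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def note_to_midi(name):
    """Convert a note name such as 'C#4' or 'Bb3' to a MIDI number (C4 = 60)."""
    letter, rest = name[0], name[1:]
    offset = 0
    while rest and rest[0] in "#b":  # sharps raise, flats lower by a semitone
        offset += 1 if rest[0] == "#" else -1
        rest = rest[1:]
    return 12 * (int(rest) + 1) + SEMITONES[letter] + offset


def build_vocab(sequences):
    """Map every distinct token (a note or a chord) to an integer id."""
    tokens = sorted({tok for seq in sequences for tok in seq})
    return {tok: idx for idx, tok in enumerate(tokens)}


# Invented example: four melody notes over two chords, so the input and
# output sequences have different lengths.
melody = [note_to_midi(n) for n in ["C5", "E5", "G5", "E5"]]
harmony = [
    tuple(note_to_midi(n) for n in chord)
    for chord in [("C3", "E3", "G3"), ("G2", "B2", "D3")]
]

melody_vocab = build_vocab([melody])
harmony_vocab = build_vocab([harmony])

melody_ids = [melody_vocab[tok] for tok in melody]      # model input
harmony_ids = [harmony_vocab[tok] for tok in harmony]   # model target
print(melody_ids, harmony_ids)
```

Here `melody_ids` plays the role of the English sentence and `harmony_ids` the role of the French one.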

The others and I are currently working on this project. I will update this post with our method and with other important aspects of NLP, such as the word2vec model, by mid-November. Stay tuned.